Past winners

An invitation to give a Kolmogorov Lecture acknowledges life-long research contributions to one of the fields initiated or transformed by Kolmogorov.

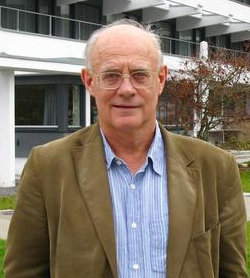

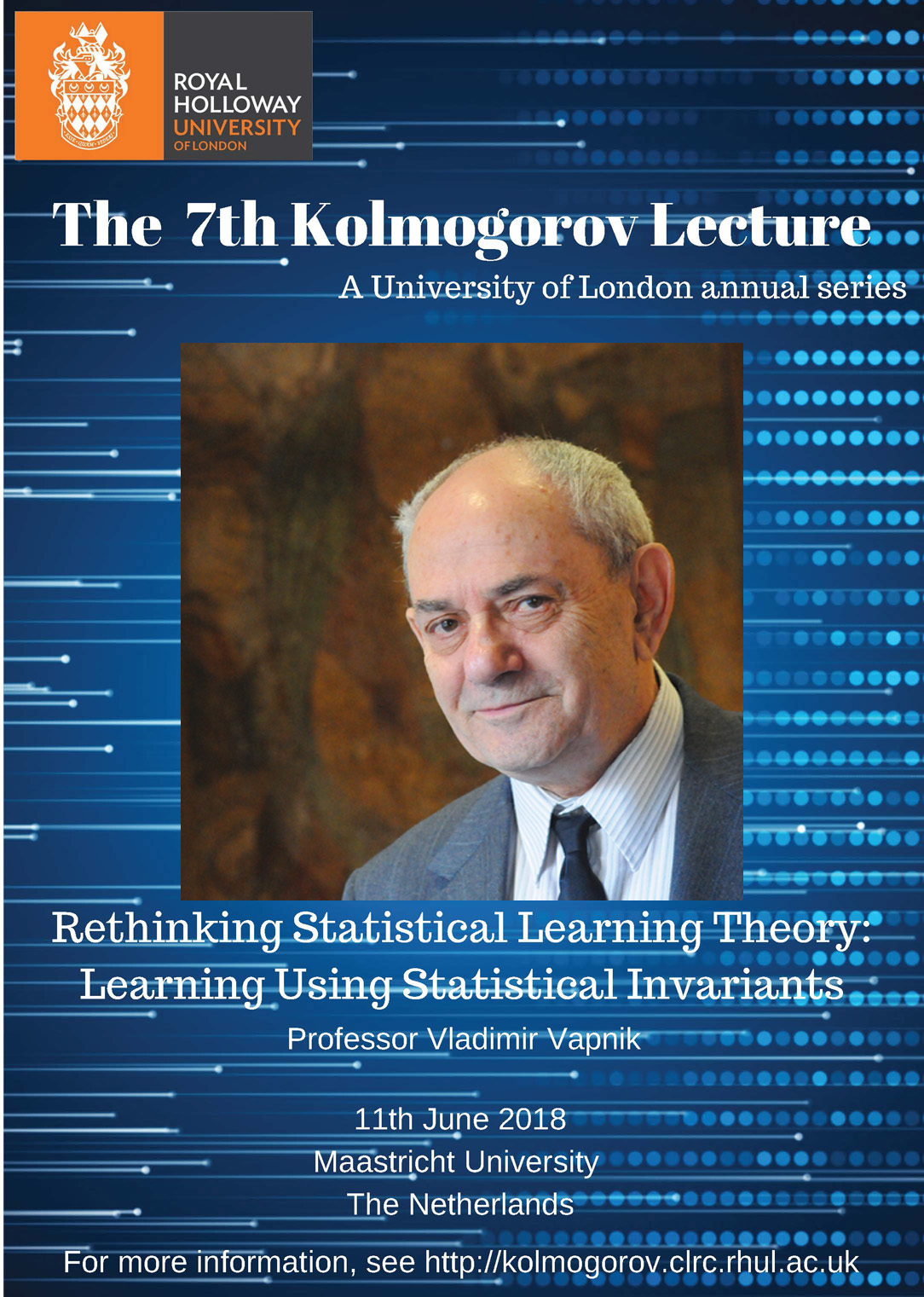

Professor Vladimir Vapnik

Prof. Vapnik is the inventor of the Vapnik–Chervonenkis theory of statistical learning and the support vector machine method.

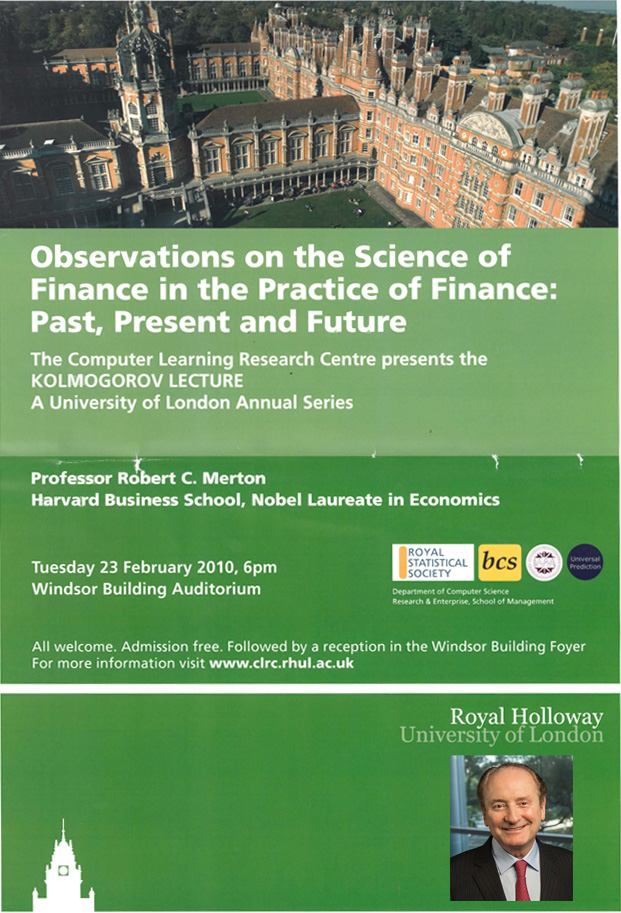

Professor Robert Merton

Prof. Merton won the Nobel Prize in Economic Sciences in 1997, for his pioneering contributions to continuous-time finance and the Black–Scholes formula.

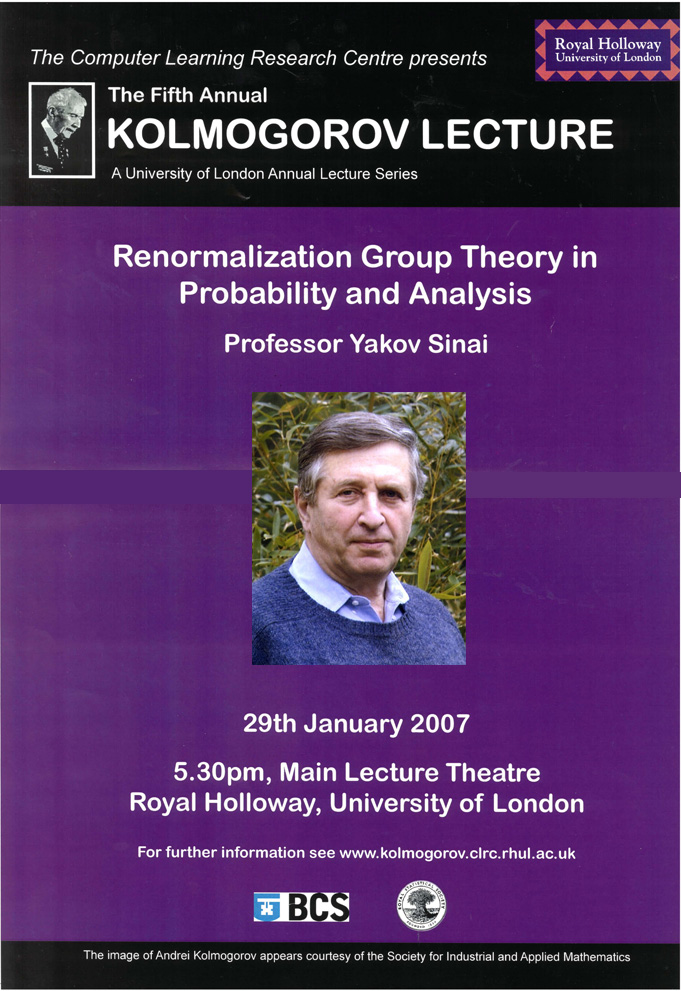

Professor Yakov Sinai

Prof. Sinai is well known for his work on dynamical systems, which has provided the groundwork for advances in the physical sciences.

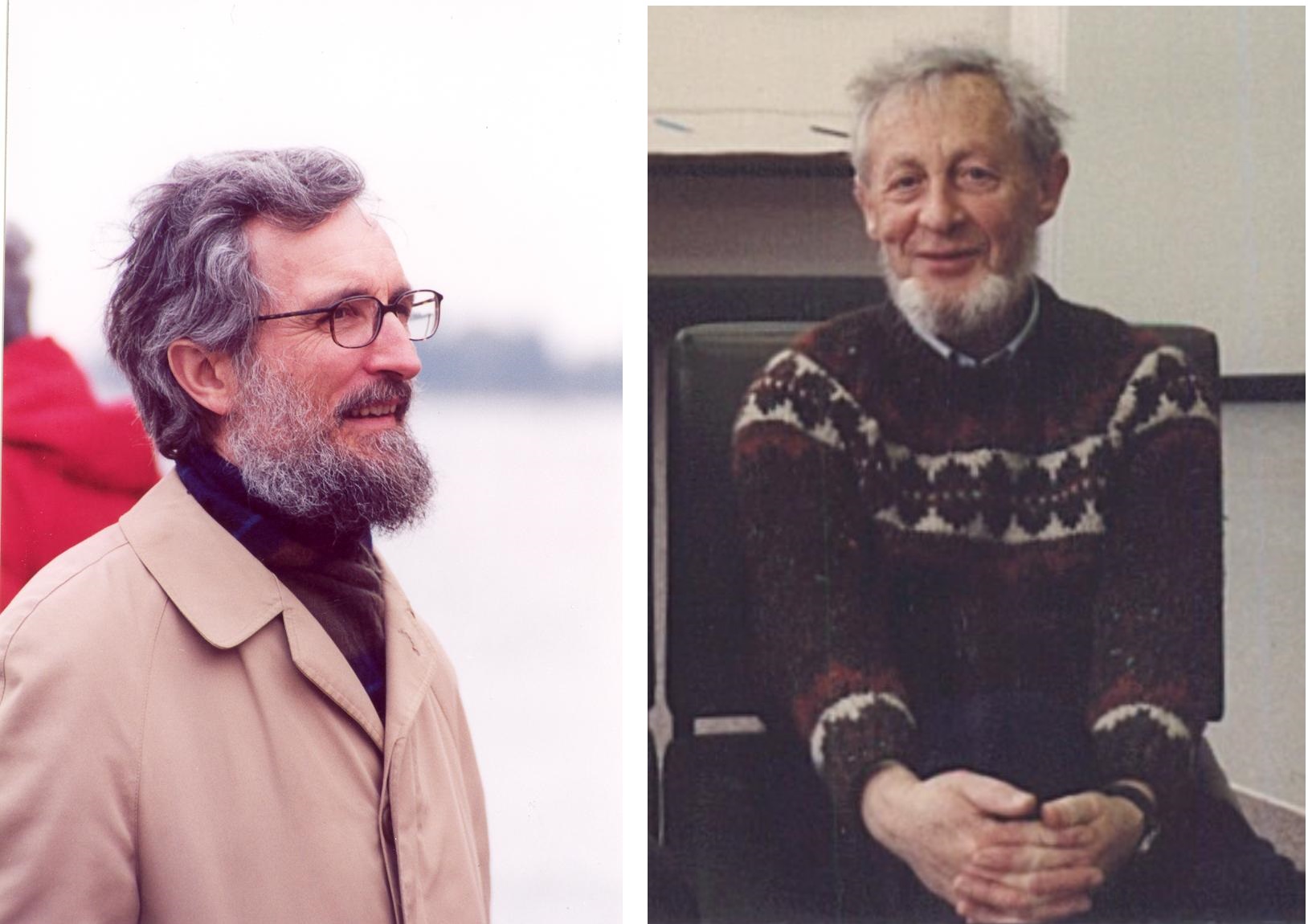

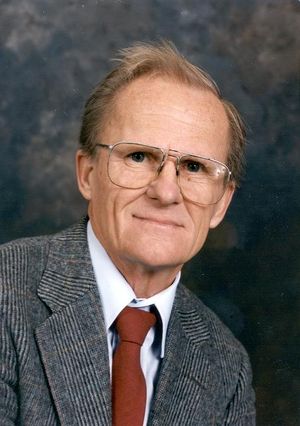

Professor Jorma Rissanen

Prof. Rissanen was the inventor of the minimum description length principle and of practical approaches to arithmetic coding for lossless data compression.

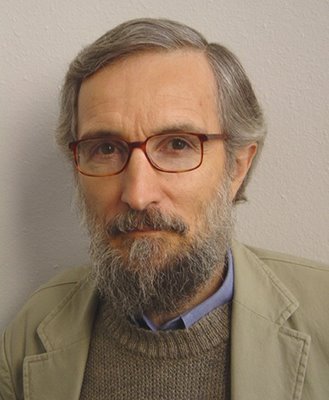

Professor Per Martin-Löf

Prof. Martin-Löf is internationally renowned for his work on the foundations of probability, statistics, and mathematical logic, especially type theory, which has influenced computer science.

Professor Leonid Levin

Prof. Levin is well known for his work on randomness in computing, algorithmic complexity and intractability, and the foundations of mathematics and computer science.

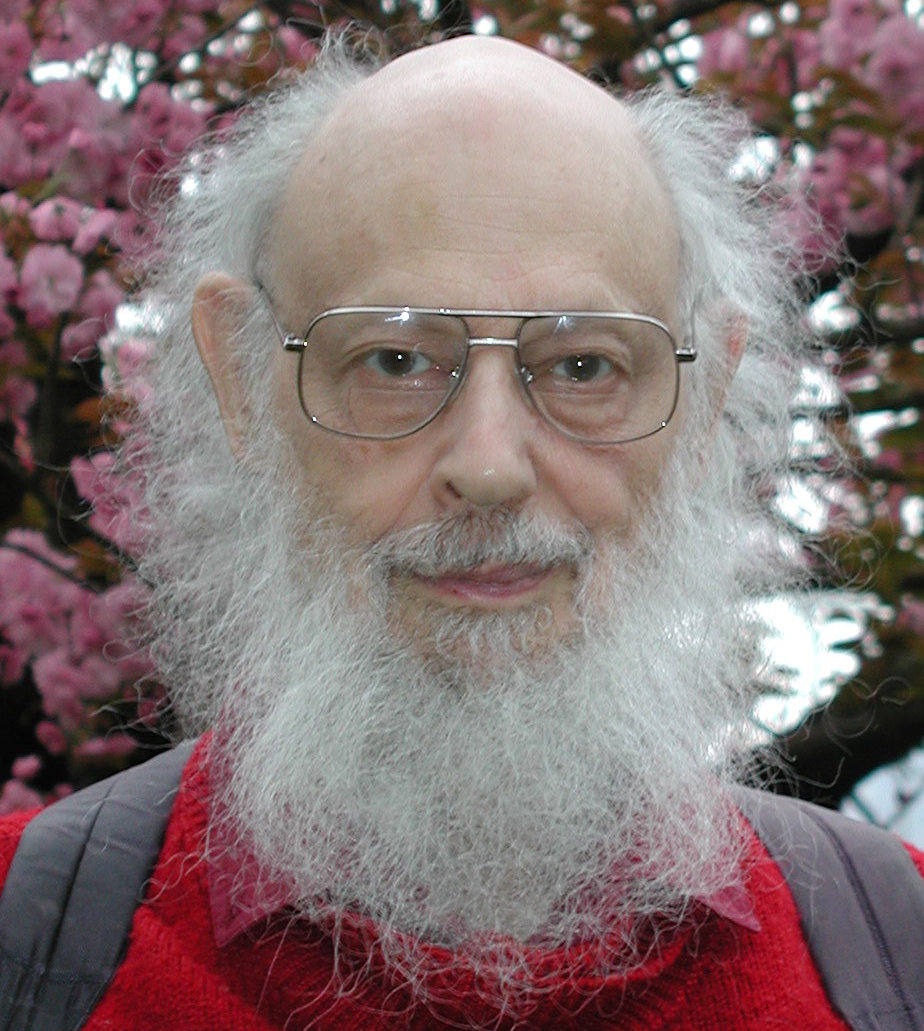

Professor Ray Solomonoff

Prof. Solomonoff invented algorithmic probability, developed his General Theory of Inductive Inference, and was a founder of algorithmic information theory.